Engineering Development

A showcase of major milestones in PanelForge's evolution, documenting the collaboration between human creativity and AI capability.

Human + AI Collaboration

How we build features together

PanelForge represents a new model of software development: deep collaboration between a human developer and an AI pair programmer. Each spotlight below documents not just what was built, but how we built it together.

Human: Vision, architecture decisions, user experience insights, project management, and real-world workflow knowledge.

AI: Implementation, code consistency, systematic refactoring, documentation, and exploring technical trade-offs.

Together: Iterative refinement, problem-solving discussions, quality assurance, and building something neither could alone.

Milestone Spotlights

GPT Image 1.5: Minutes After OpenAI Release

OpenAI announced gpt-image-1.5 at ~6pm CET. By 6:30pm, PanelForge had full production support. This isn't luck - it's architecture. Our modular multi-provider system, built during the Gemini 3 Pro sprint, meant adding OpenAI's new flagship required exactly 8 file changes and zero service modifications.


The Story

The news dropped.

OpenAI released gpt-image-1.5 on December 17, 2025:

- 4x faster generation

- 20% cheaper than gpt-image-1

- Better instruction following

- Improved text rendering

- First 5 input images get high fidelity (vs 1 for older models)

The question wasn't "can we support this?" - it was "how fast?"

The answer: 30 minutes.

We'd already done the hard work during the Gemini 3 Pro integration in November. That sprint forced us to refactor from a monolithic single-provider system to a modular multi-provider architecture.

The key insight: All OpenAI image models share the same API surface. gpt-image-1, gpt-image-1-mini, and now gpt-image-1.5 all use the same endpoints, same parameters, same response format.

So when we built OpenAIImageGenerationService, we made it model-agnostic:

// Routes ALL gpt-* models - no specific model checks needed
$postData = [
    'model' => $model, // Just pass through whatever model is selected
    'prompt' => $prompt,
    // ... same params for all models
];

The implementation was pure configuration:

- Add to SettingsSchema options

- Add to FormRequest validation rules

- Add to pricing config

- Update frontend model detection

That's it. No service changes. No new classes. No refactoring.
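The same "configuration, not code" idea can be sketched in TypeScript, assuming a single config table drives validation and a prefix check drives routing. `IMAGE_MODELS`, `isSupportedImageModel`, `providerFor`, and `buildImageRequest` are hypothetical names, not PanelForge's actual code; the point is that the request builder passes the model name through untouched, so a new gpt-* model is one more entry in the table.

```typescript
// Hypothetical config table: the only place a new model needs to be listed.
const IMAGE_MODELS = ["gpt-image-1", "gpt-image-1-mini", "gpt-image-1.5"] as const;

type ImageModel = (typeof IMAGE_MODELS)[number];

interface ImageRequest {
  model: string;
  prompt: string;
}

// Validation reads from the same table (stands in for the FormRequest rule).
function isSupportedImageModel(model: string): model is ImageModel {
  return (IMAGE_MODELS as readonly string[]).includes(model);
}

// Routing by prefix: every gpt-* model goes to the OpenAI service.
function providerFor(model: string): "openai" | "other" {
  return model.startsWith("gpt-") ? "openai" : "other";
}

// Model-agnostic payload: no per-model branches, the name is passed through.
function buildImageRequest(model: ImageModel, prompt: string): ImageRequest {
  return { model, prompt };
}
```

In the real app, pricing and frontend model detection would still need their own entries, which matches the four-item list above.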

Key Features

4x faster generation

20% cheaper than gpt-image-1

Better instruction following

Improved text rendering

First 5 input images get high fidelity

30-minute integration time

Zero service code changes

Same-day production support


December Sprint: AI Rephrase, Performance & Mobile Fixes

An intensive sprint delivering the AI Prompt Rephrase system, systematic N+1 query optimizations across the entire frontend, and critical iOS Safari mobile fixes. This spotlight showcases how rapid iteration with AI collaboration can ship production features, resolve performance bottlenecks, and fix platform-specific bugs in a single focused sprint.


The Story

Three challenges converged in December:

1. Prompt Crafting Was Manual and Tedious

Users wrote prompts for image generation, but transforming a casual description into a camera-ready shot description required expertise. "A guy walking in rain" could become something much more evocative with proper cinematographic language - but who has time to learn that?

2. Performance Was Silently Degrading

N+1 queries had crept in across Gallery, Chat, and the Jobs page. Each page load triggered dozens of extra database queries. With growing data volumes, response times were increasing imperceptibly - until they weren't imperceptible anymore.

3. iOS Safari Touch Was Broken

After keyboard dismiss on the Chat page, the entire page became unresponsive to touch. Users had to switch apps and come back just to continue typing. This was WebKit bug #176454 - nested overflow-hidden elements creating touch "dead zones".

The Rephrase System: From Concept to Production in 48 Hours

The idea started simple: what if AI could transform casual prompts into professional shot descriptions? We had the Gemini models, we had the async job architecture from Chat. Why not apply it to prompts?

Day 1: Built the core ProcessRephraseJob following Chat's async pattern - broadcast "thinking" event, call Gemini, broadcast result. Added RephraseAttempt model for tracking status and costs.

Night 1: Realized users needed guidance on how to rephrase. Created SystemPrompt model with admin-configurable presets. The "Scene Clarifier" preset was born - transforming scene descriptions into camera-ready shots.

Day 2: Frontend modals (RephrasePresetModal, RephraseResultModal), image context support (attach 2 images for smarter transformations), and the RephraseAnalytics page. Complete feature shipped.
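The Day 1 job follows a three-step pattern: broadcast "thinking", call the model, broadcast the result, while recording status on an attempt record. Below is a TypeScript sketch of that flow under stated assumptions: PanelForge's actual ProcessRephraseJob is a Laravel job, and the `Broadcaster`, `ModelClient`, and `RephraseAttempt` shapes and the channel naming here are hypothetical stand-ins.

```typescript
type AttemptStatus = "pending" | "processing" | "completed" | "failed";

// Hypothetical stand-in for the RephraseAttempt record.
interface RephraseAttempt {
  id: number;
  prompt: string;
  status: AttemptStatus;
  result?: string;
  error?: string;
}

interface Broadcaster {
  send(channel: string, event: string, payload: unknown): void;
}

interface ModelClient {
  rephrase(prompt: string, systemPrompt: string): Promise<string>;
}

async function processRephraseJob(
  attempt: RephraseAttempt,
  systemPrompt: string,
  model: ModelClient,
  broadcaster: Broadcaster,
): Promise<RephraseAttempt> {
  const channel = `rephrase.${attempt.id}`;
  attempt.status = "processing";
  // 1. Tell the UI to show its "thinking" state.
  broadcaster.send(channel, "thinking", { id: attempt.id });
  try {
    // 2. Call the model with the admin-configured preset as the system prompt.
    attempt.result = await model.rephrase(attempt.prompt, systemPrompt);
    attempt.status = "completed";
    // 3. Push the result to the waiting client.
    broadcaster.send(channel, "completed", { id: attempt.id, result: attempt.result });
  } catch (err) {
    // Failures are recorded on the attempt and broadcast, not swallowed.
    attempt.status = "failed";
    attempt.error = err instanceof Error ? err.message : String(err);
    broadcaster.send(channel, "failed", { id: attempt.id, error: attempt.error });
  }
  return attempt;
}
```

Injecting the broadcaster and model client keeps the job testable without a live WebSocket server or Gemini key.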

The N+1 Hunt: Systematic Performance Optimization

Armed with Laravel Debugbar, we profiled every major page:

- Gallery: 47 queries → 8 queries (eager loading relationships)

- Chat: 23 queries → 6 queries (conversation.messages eager load)

- Jobs page: 31 queries → 4 queries (batch loading with with())

The pattern was always the same: iterate over a collection, access a relationship, trigger a query. The fix was always the same: add the relationship to with() in the initial query.
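To make the pattern concrete, here is a small TypeScript simulation (not Laravel code) that counts queries against a fake data source. `FakeDb` and its methods are hypothetical: lazy access issues one query per item, while loading all related rows in one batched, whereIn-style query keeps the count constant, which is roughly what Eloquent's with() does.

```typescript
interface Panel {
  id: number;
  title: string;
}

interface GalleryImage {
  id: number;
  panelId: number;
}

// Hypothetical fake data source that counts how many "queries" run.
class FakeDb {
  queries = 0;
  private readonly images: GalleryImage[];
  private readonly panels: Panel[];

  constructor(images: GalleryImage[], panels: Panel[]) {
    this.images = images;
    this.panels = panels;
  }

  allImages(): GalleryImage[] {
    this.queries++;
    return [...this.images];
  }

  // One query per call: what lazy relationship access does inside a loop.
  panelById(id: number): Panel | undefined {
    this.queries++;
    return this.panels.find((p) => p.id === id);
  }

  // One query for a whole batch of ids: the shape of an eager load.
  panelsByIds(ids: number[]): Panel[] {
    this.queries++;
    const wanted = new Set(ids);
    return this.panels.filter((p) => wanted.has(p.id));
  }
}

// N+1: one query for the images, then one more per image.
function titlesLazy(db: FakeDb): string[] {
  return db.allImages().map((img) => db.panelById(img.panelId)?.title ?? "");
}

// Eager loading: one query for the images, one for all of their panels.
function titlesEager(db: FakeDb): string[] {
  const images = db.allImages();
  const panels = db.panelsByIds(Array.from(new Set(images.map((i) => i.panelId))));
  const byId = new Map(panels.map((p) => [p.id, p] as const));
  return images.map((img) => byId.get(img.panelId)?.title ?? "");
}
```

With 10 images, the lazy version runs 11 queries and the eager version runs 2; the eager count stays at 2 however many images there are.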

The iOS Safari Fix: Debugging WebKit in Production

The touch bug was maddening. It only happened after keyboard dismiss. It only happened in Safari. It only happened with our specific DOM structure.

The culprit: nested overflow-hidden elements. WebKit bug #176454 causes touch events to stop propagating through certain overflow configurations. The fix required three changes:

- GPU layer forcing with transform: translateZ(0) to trigger a repaint

- touch-action: pan-y for explicit touch handling

- Momentum scrolling via -webkit-overflow-scrolling: touch

We also stopped pull-to-refresh from incorrectly triggering on Chat, and added an AppLayout provide/inject pattern so specific pages can explicitly disable pull-to-refresh (PTR).

Key Features

Transform casual descriptions into professional shot language with Scene Clarifier and other admin-configurable presets.

Choose between Gemini 2.5 Flash-Lite (fast), Gemini 2.5 Flash (balanced), or Gemini 3 Pro (quality) for rephrase operations.

Attach up to 2 images when rephrasing for more intelligent, context-aware transformations.

Systematic N+1 elimination across Gallery, Chat, and Jobs pages through eager loading patterns.

Worked around WebKit bug #176454 with GPU layer forcing, touch-action, and momentum scrolling.

Side-by-side and slider comparison modes with 15x zoom for detailed image inspection.

Moderation UI distinguishes between permanent rejections and transient failures with filters.

DialogDescription added to all modal components for screen reader compatibility.


Redis Memory Bloat: Mobile Debugging on the Metro

A production crisis turned into a showcase of rapid prototyping. With the server throwing 500 errors and Redis consuming 4.49GB, David diagnosed and fixed the issue via iPhone SSH while commuting on the Copenhagen Metro. Claude Code ran directly on the production server, implementing a 90% memory reduction before arriving at work. This is the YOLO approach - only appropriate for hobby projects with backups.


The Story

The server was dying. 500 errors everywhere. Redis screaming:

MISCONF Redis is configured to save RDB snapshots, but it's currently unable to persist to disk

The diagnosis:

- Disk at 93% full (only 2.6GB free on 38GB)

- Redis consuming 4.49GB of memory

- 79,609 MISCONF errors logged in one day

- gemini:chat:* keys = 4.3GB (96% of Redis!)

The root cause? Model-generated images with "thought signatures" were being stored as full base64 in Redis. A single conversation could reach 60-100MB, and with 485 conversations stored, Redis was bloated beyond capacity.

Here's where it gets interesting.

This entire debugging session happened via iPhone SSH. David was on the Copenhagen Metro, heading to work, using Termius to SSH into the production server. Claude Code was running directly on the server instance.

The YOLO preface: This approach is explicitly NOT recommended for production applications with external users. But for a hobby project with automated Hetzner backups? YOLO. For rapid prototyping, there's no equivalent to fixing issues on the spot.

The investigation unfolded:

First, immediate triage - freed disk space by removing Redis temp files (2.8GB), old logs, and Docker images. Disk dropped from 93% to 86%.

Then the deep dive. We found that FileReferenceService.ts was deliberately skipping model-generated images:

if (part.thoughtSignature || part.thought) {
    console.log('[FILE_REF] Keeping model-generated image as base64...');
    continue; // THIS KEEPS 1-10MB PER IMAGE IN REDIS
}

But why? The Gemini SDK strips thoughtSignature from fileData parts during serialization. This field is critical for multi-turn conversations - without it, Gemini returns 400 errors. So these images HAD to stay as inlineData (base64).

The insight: We can't use Gemini's File API for thought-signed images, but we CAN store the image binary locally and keep only a reference in Redis. Same pattern as the File API fix, but for a different constraint.
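A minimal TypeScript sketch of that dehydration step, assuming a simplified part shape: inline base64 images that carry a thoughtSignature are written to content-addressed storage named by their SHA-256 hash, and the Redis copy keeps only a reference plus the signature. `InlinePart`, `RefPart`, `BlobStore`, and `dehydrate` are hypothetical, and an in-memory Map stands in for the chat-history directory. Parts that are already references pass through untouched, which is what makes re-running a migration safe.

```typescript
import { createHash } from "node:crypto";

// Hypothetical simplified shapes; real Gemini content parts carry more fields.
interface InlinePart {
  kind: "inline";
  mimeType: string;
  data: string; // base64 payload
  thoughtSignature?: string;
}

interface RefPart {
  kind: "ref";
  mimeType: string;
  hash: string; // SHA-256 of the payload, used as the file name
  thoughtSignature?: string;
}

type Part = InlinePart | RefPart;

// Stand-in for files in a chat-history directory.
interface BlobStore {
  has(hash: string): boolean;
  put(hash: string, data: string): void;
}

function dehydrate(parts: Part[], store: BlobStore): Part[] {
  return parts.map((part): Part => {
    // Already dehydrated, or not a thought-signed image: leave it alone.
    if (part.kind === "ref" || !part.thoughtSignature) return part;
    const hash = createHash("sha256").update(part.data).digest("hex");
    // Identical images hash to the same name, so each is stored only once.
    if (!store.has(hash)) store.put(hash, part.data);
    return {
      kind: "ref",
      mimeType: part.mimeType,
      hash,
      thoughtSignature: part.thoughtSignature, // kept for multi-turn requests
    };
  });
}
```

Before a request is sent, the reverse step would read the file back by hash and rebuild the inlineData part with its signature intact.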

Key Features

Entire investigation and fix done via iPhone SSH (Termius) while commuting on the Copenhagen Metro.

Redis dropped from 4.49GB to 450MB - thought-signed images now stored on disk instead of inline.

941 images migrated across 195 conversations with complete data preservation.

The Gemini SDK strips thoughtSignature from fileData parts; our local storage approach preserves it.

Migration script safe to run multiple times - already-dehydrated images are automatically skipped.

Images stored in chat-history directory with SHA-256 hash filenames for automatic deduplication.

From crisis to fix in ~2 hours while commuting. The YOLO approach works for solo projects with backups.

Hetzner automated backups enabled bold debugging - would never do this without recovery options.


Gemini File API: From OOM Crash to 64MB in 15 Minutes

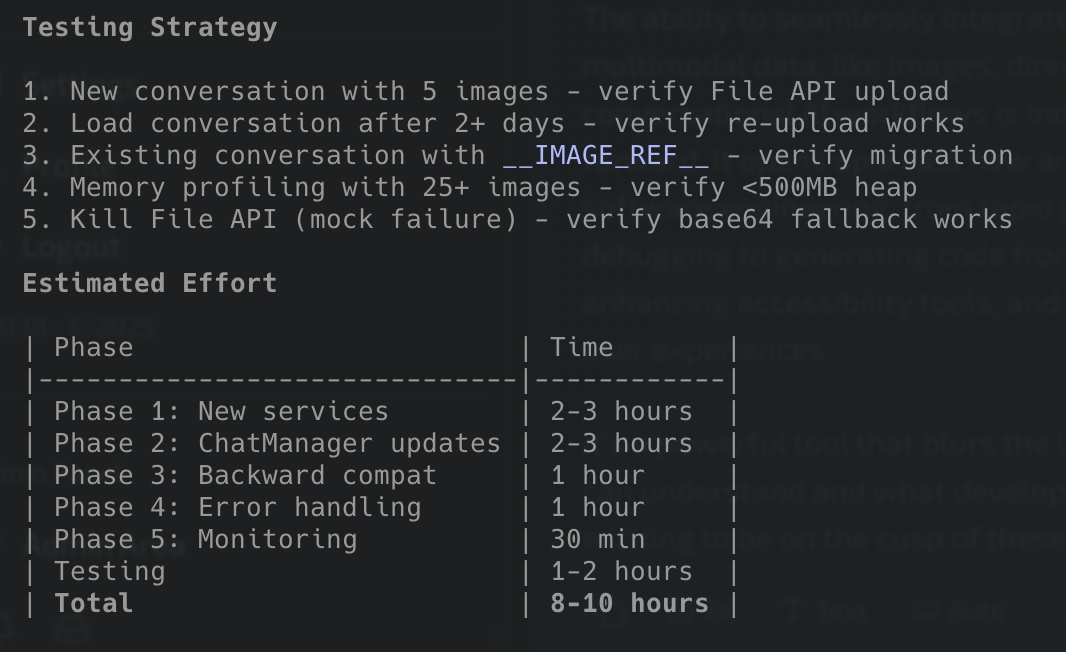

A production server crash led to an architectural revelation. The Express Gemini microservice was loading every image in a conversation into memory - 21 images meant 2GB heap and a crash. Together, we diagnosed the root cause, discovered Gemini File API as the solution, and implemented a 97% memory reduction in under 15 minutes. The AI estimated 8-10 hours for the fix.


The Story

A chat message failed on production. The curl response was unusual - empty reply from server.

Investigating the logs revealed the truth:

FATAL ERROR: Reached heap limit Allocation failed - JavaScript heap out of memory

The Express Gemini microservice had crashed. Not a network blip. Not a Gemini API issue. Out of memory.

Digging deeper into the container logs showed the crash happened while processing conversation 225 - a conversation with 21 images. The heap was at 1.9GB when it died.

The architecture flaw was clear once we saw it:

Every message, the rehydrateHistory() function loaded ALL images from Redis into memory as base64, and that data was deep-cloned 3-4 times during processing. With 21 images in the conversation, the heap climbed to roughly 2GB.

The container auto-restarted and the retry worked, but this was a ticking time bomb. Any conversation with enough images would crash the server.

"How do other services handle this?"

That question changed everything. ChatGPT, Claude, every major AI service handles image-heavy conversations - they don't load everything into memory every time.

The answer: cloud storage + URLs. Upload files to a service, pass just the URI to the model. The model fetches what it needs.

Then we discovered Gemini File API.

Google provides a free File API where you can upload images and reference them by URI. The Gemini SDK accepts these URIs directly - no need to load base64 into memory at all. Files expire after 48 hours, but we could keep local backups and re-upload on demand.
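The "keep local backups and re-upload on demand" idea can be sketched as a small resolver: reuse a cached file URI while it is still inside the 48-hour window, otherwise re-upload from the local copy. This TypeScript sketch rests on assumptions: the injected `upload` callback stands in for a real File API upload (not shown), the cache key combines an API-key hash with an image hash, and the one-hour safety margin is an arbitrary illustrative value. `FileUriResolver` is a hypothetical name.

```typescript
const FILE_TTL_MS = 48 * 60 * 60 * 1000; // File API retention window
const SAFETY_MARGIN_MS = 60 * 60 * 1000; // re-upload a little early (illustrative)

interface CachedUpload {
  uri: string;
  uploadedAt: number; // epoch milliseconds
}

// Hypothetical signature: upload a local file, get back its file URI.
type Uploader = (localPath: string) => Promise<string>;

class FileUriResolver {
  private cache = new Map<string, CachedUpload>();
  private upload: Uploader;
  private now: () => number;

  constructor(upload: Uploader, now: () => number = Date.now) {
    this.upload = upload;
    this.now = now;
  }

  async resolve(apiKeyHash: string, imageHash: string, localPath: string): Promise<string> {
    // Files are scoped to the key that uploaded them, so the key is part of the cache key.
    const key = `${apiKeyHash}:${imageHash}`;
    const cached = this.cache.get(key);
    const age = cached ? this.now() - cached.uploadedAt : Infinity;
    // Reuse the URI only while it is safely inside the TTL window.
    if (cached && age < FILE_TTL_MS - SAFETY_MARGIN_MS) return cached.uri;
    // Expired or never uploaded: re-upload from the local backup.
    const uri = await this.upload(localPath);
    this.cache.set(key, { uri, uploadedAt: this.now() });
    return uri;
  }
}
```

Only the returned URI string needs to live in the conversation history, which is what keeps the heap small.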

Here's where it gets interesting.

The AI wrote a comprehensive implementation plan with phases, error handling strategies, monitoring endpoints, and testing criteria. At the bottom:

8-10 hours estimated.

But we didn't need 8-10 hours. We had context. We understood the problem. We knew exactly what needed to change.

The implementation took 15 minutes.

Two new service files. Updates to the ChatManager. Debug endpoints. The entire architecture shift - from "load everything into memory" to "pass URIs to Gemini" - done in a single focused session.

Key Features

Images uploaded to Google's servers and referenced by URI - free service with 48-hour TTL.

From 2GB heap crashes to 64MB stable operation - URIs in memory instead of base64 blobs.

Files expire after 48 hours - local backups enable transparent re-upload on demand.

Upload results cached per API key hash - avoid duplicate uploads for the same user.

Legacy __IMAGE_REF__ format auto-migrates to new __FILE_REF__ format on first access.

Transient failures handled with exponential backoff - 1s, 2s, 4s delays before failing.

/debug/memory and /debug/conversation/:id for monitoring heap usage and reference counts.

From crash investigation to working fix in under 15 minutes - AI estimated 8-10 hours.
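The retry behaviour can be sketched as a generic helper: up to three retries after the first attempt, doubling the delay from 1s (1s, 2s, 4s) before giving up. This TypeScript version is an assumption about the shape, not the microservice's code: it treats every error as transient (real code would retry only retryable failures), and `withBackoff` and its injectable `sleep` parameter are hypothetical, the latter so tests do not actually wait.

```typescript
type Sleep = (ms: number) => Promise<void>;

const realSleep: Sleep = (ms) => new Promise<void>((resolve) => setTimeout(resolve, ms));

async function withBackoff<T>(
  op: () => Promise<T>,
  retries = 3,
  baseDelayMs = 1000,
  sleep: Sleep = realSleep,
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await op();
    } catch (err) {
      // Out of retries: surface the last error to the caller.
      if (attempt >= retries) throw err;
      // Delays of 1s, 2s, 4s after attempts 0, 1, 2.
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```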


TypeScript Type Safety: From 200+ Errors to Zero

A systematic sweep through the entire Vue 3 codebase to achieve full TypeScript type safety. What started as 200+ type errors across 20+ files became zero errors through careful analysis of shadcn-vue/reka-ui component types, Vue 3 reactivity patterns, and Pinia store interfaces. The result: a codebase where the compiler catches bugs before users do.


The Story

Running npm run type-check produced a wall of red. 200+ TypeScript errors across the Vue 3 codebase.

The errors weren't random - they clustered around specific patterns:

• AcceptableValue unions - shadcn-vue's Select components emit values that include bigint, but our handlers only accepted string | number. Every dropdown was a potential type mismatch.

• Readonly array friction - Pinia stores return readonly() arrays for immutability, but child components expected mutable arrays. Props that should "just work" threw type errors.

• Template ref typing - Vue 3's template refs and MaybeRef utility types weren't playing nice with our composables. The useRowAwareExpansion hook couldn't accept refs from parent components.

• Interface drift - Local component interfaces like Image had diverged from the canonical galleryStore.Image. Properties were missing, types didn't match, and every file had its own slightly-wrong definition.

• Third-party type gaps - Splide.js and Swiper.js lacked complete TypeScript definitions. We were flying blind on carousel components.

The codebase worked at runtime, but the type system couldn't prove it.

The approach: systematic, not scattered.

Rather than playing whack-a-mole with individual errors, we identified the root patterns and fixed them at the source.

AcceptableValue handlers came first.

shadcn-vue's Select component emits an AcceptableValue type: string | number | bigint | boolean | Record<string, unknown> | null. Our handlers only accepted partial unions. The fix was simple once identified - extend every handler to accept the full union, then coerce to the expected type:

function handleChange(value: AcceptableValue) {
    // Accept the full union, then coerce to the string the parent expects
    emit('update:value', String(value));
}

Applied across GalleryFilterSheet, GalleryPickerModal, ProjectScenePanelSelector, CostDashboard, AnalyticsFilters, and more.
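One caveat with a bare String(value): String(null) is "null" and a plain object becomes "[object Object]". Below is a TypeScript sketch of a stricter normalizer, assuming the union listed above; the local `AcceptableValue` alias mirrors that union rather than importing the library type, and `toSelectString` is a hypothetical helper.

```typescript
// Local alias mirroring the union above (assumption: not imported from the library).
type AcceptableValue =
  | string
  | number
  | bigint
  | boolean
  | Record<string, unknown>
  | null;

// Returns a string for scalar values and null for "nothing usable",
// so the caller can decide whether to emit at all.
function toSelectString(value: AcceptableValue): string | null {
  if (value === null) return null;
  switch (typeof value) {
    case "string":
      return value;
    case "number":
    case "bigint":
    case "boolean":
      return String(value);
    default:
      // Object values are not expected from string-valued Selects.
      return null;
  }
}
```

A handler would then emit only when the result is non-null, e.g. `const v = toSelectString(value); if (v !== null) emit('update:value', v);`.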

Readonly arrays needed prop flexibility.

When useCostAnalytics returns readonly(trends), passing it to `<CostTrendChart :trends="trends" />` fails if the prop expects CostTrend[]. The solution: accept both:

interface Props {
    trends: readonly CostTrend[] | CostTrend[];
}

Applied to CostTrendChart, ModelBreakdownChart, and CostSummaryCards.
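A related TypeScript detail, shown as a standalone check: a mutable array is already assignable to a readonly array type, so `readonly CostTrend[]` on its own accepts both kinds of input; it is the reverse direction (a readonly array into a mutable-typed prop) that fails. The `CostTrend` fields and the `totalCost` function below are hypothetical.

```typescript
interface CostTrend {
  date: string;
  cost: number;
}

// A `readonly CostTrend[]` parameter accepts mutable and readonly arrays alike.
function totalCost(trends: readonly CostTrend[]): number {
  return trends.reduce((sum, t) => sum + t.cost, 0);
}

const mutableTrends: CostTrend[] = [
  { date: "2025-12-01", cost: 1.25 },
  { date: "2025-12-02", cost: 0.75 },
];
const frozenTrends: readonly CostTrend[] = mutableTrends;

// Both calls type-check. The reverse assignment,
// `const m: CostTrend[] = frozenTrends;`, is a compile error.
const fromMutable = totalCost(mutableTrends);
const fromReadonly = totalCost(frozenTrends);
```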

Template refs required permissive typing.

useRowAwareExpansion expected Ref<HTMLElement | null>, but parent components passed various ref types. The fix uses Vue's MaybeRef with an escape hatch for complex template refs:

export function useRowAwareExpansion(
    gridRef: Ref<HTMLElement | null> | MaybeRef<any>,
    itemCount: MaybeRef<number>
)

Interface consolidation was the big win.

ImageDetailsSheet.vue had its own 40-line Image interface that was missing metadata, inpaint_attempt_id, and parent_image. Instead of keeping it in sync, we deleted it entirely and imported from galleryStore:

import type { Image } from '@/stores/galleryStore';

Then we enriched galleryStore.Image with the missing properties so all consumers benefit.

Third-party types got declaration files.

For Splide.js, we created resources/js/types/splide.d.ts with comprehensive SplideOptions and SplideInstance interfaces. Same for Swiper - making SwiperContainer extend HTMLElement so classList access works.

Edge cases got individual attention:

• KonvaCanvas.vue - Removed .value from template (Vue auto-unwraps refs)

• Settings.vue - Type assertion for dynamic prompt_templates.${index} error keys

• Profile/Edit.vue - Added status and is_admin to User interface

• Inpaint/Create.vue - Fixed typo: props.imageSizes → props.gptModelSizes

• Gallery/Index.vue - Nested panel structure in assignment callback

Key Features

Full `npm run type-check` pass with no errors - the compiler validates every component, composable, and store.

Single source of truth for shared types like Image - import from stores, never redefine locally.

Consistent handler pattern for shadcn-vue Select components that properly handles all possible value types.

Type mismatches caught during development, not in production - props, emits, and function calls all validated.

Change a type definition and immediately see every affected location - no more runtime surprises.

Props accept both mutable and readonly arrays, enabling seamless Pinia store integration.

Custom .d.ts files for Splide.js and Swiper - full IntelliSense for carousel components.

Composables accept flexible ref types - works with ref(), shallowRef(), computed(), and raw values.


Gemini Chat with Image Generation

Evolved PanelForge from single-turn image generation to a fully-featured multi-turn chat system with image input/output, conversation forking for message editing, and integrated cost tracking. What started as adding Gemini support evolved into a complete conversation management system with async job processing and real-time WebSocket updates. 31 commits over 3 days delivered a feature that transforms creative workflows from transactional to conversational.


The Story

Ever tried to refine an AI-generated image?

"Make the sky more dramatic." "Actually, less dramatic." "Can you keep the clouds but change the colors?"

With single-shot generation, you'd start from scratch every time. No memory. No context. No conversation. Just generate → view → repeat.

We wanted something better.

It started simple.

We built an Express microservice to talk to Gemini. Send a message, get a response. Basic chat worked on day one.

Then reality hit.

Gemini takes 5-10 seconds to think. A frozen UI? Unacceptable. So we rebuilt everything around jobs and WebSockets. Now you see a "thinking" animation while Gemini works, and responses appear the moment they're ready. Night and day difference.

Then users asked the hard question.

"What if I want to edit my earlier message?"

Here's the problem: Gemini maintains "thought signatures" - internal reasoning state that becomes invalid if you change history. You can't just edit in place.

Our solution? Forking. Click edit, and we branch the conversation. Your original stays intact. The fork picks up from that point with your new message. Git for conversations, basically.
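A TypeScript sketch of fork-on-edit, assuming a conversation is an ordered list of messages plus a parent pointer. The `Conversation` shape and `forkAt` function are hypothetical; the key properties are that the original is never mutated and that the fork's history is an unchanged prefix of the original followed by the edited message, so earlier turns (and their signatures) stay exactly as Gemini saw them.

```typescript
interface Message {
  role: "user" | "model";
  text: string;
}

interface Conversation {
  id: string;
  parentId: string | null;
  forkedAtIndex: number | null;
  messages: Message[];
}

// Editing message `index` creates a new branch instead of rewriting history.
function forkAt(
  original: Conversation,
  index: number,
  newText: string,
  newId: string,
): Conversation {
  const target = original.messages[index];
  if (!target || target.role !== "user") {
    throw new Error(`Message ${index} is not an editable user message`);
  }
  return {
    id: newId,
    parentId: original.id,
    forkedAtIndex: index,
    // Copied prefix + the edited message; later turns stay only in the original.
    messages: [
      ...original.messages.slice(0, index).map((m) => ({ ...m })),
      { role: "user", text: newText },
    ],
  };
}
```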

Then we hit a wall.

A 10-message chat with images was eating 6MB of Redis. Per conversation. That doesn't scale.

The fix: dehydration. We pull large blobs (images, thought data) out to disk and leave lightweight references in Redis. Same instant load times, 92% less memory. Now conversations can live forever.

Then came the polish. Lots of it.

Model selection arrived next - Gemini 2.5 Flash for quick iterations, Gemini 3 Pro for quality and 4K resolution. Then response modes: text-only, image-only, or let the AI decide.

Resolution and aspect ratio controls followed. Users wanted control over output dimensions without leaving the conversation.

Mobile testing revealed a problem: accidental sends. Tapping Enter on a phone keyboard would fire off half-finished messages. Solution: require Cmd/Ctrl+Enter on desktop, use the send button on mobile.
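The send rule reduces to a small predicate. This TypeScript sketch takes only the KeyboardEvent fields it needs (key, metaKey, ctrlKey) plus a hypothetical `isMobile` flag, so it runs without a browser; how mobile is detected is left out.

```typescript
// Subset of KeyboardEvent fields the rule depends on.
interface KeyInput {
  key: string;
  metaKey: boolean;
  ctrlKey: boolean;
}

// Desktop: only Cmd+Enter (macOS) or Ctrl+Enter sends; plain Enter adds a newline.
// Mobile: Enter never sends; the send button is the only path.
function shouldSendOnKey(e: KeyInput, isMobile: boolean): boolean {
  if (isMobile || e.key !== "Enter") return false;
  return e.metaKey || e.ctrlKey;
}
```

Because a real KeyboardEvent structurally satisfies `KeyInput`, a keydown handler can call `shouldSendOnKey(event, isMobile)` and only then prevent the default and send.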

The AI personality layer.

System instructions let you configure how Gemini behaves. "Be concise." "Focus on comic book art." "Always suggest alternatives." Each conversation can have its own personality.

Default settings came next - your preferred model, resolution, and response mode saved so every new conversation starts the way you like.

We added reasoning display too. When Gemini shows its thought process, you can expand it and see how it arrived at its answer.

Conversation management improvements.

Conversation previews in the sidebar - see the first message and response without opening each one.

Prompt templates: 5 reusable text snippets you define in settings, accessible from a quick menu. Perfect for repetitive instructions.

And the infrastructure work.

Cost tracking integration - every chat message calculates tokens and cost, rolling into your monthly analytics.

Docker containerization for the Express microservice. CI/CD pipeline for deployments.

Better error handling, upstream failure recovery, job lifecycle feedback improvements.

31 commits. Each one solving a real problem we hit while using it.

Key Features

Have natural back-and-forth with AI, building context across multiple messages.

Attach gallery images for context - perfect for "fix this background" requests.

Have Gemini generate images directly in the conversation without leaving chat.

Choose between Gemini 2.5 Flash (fast, economical) or Gemini 3 Pro Image (advanced).

Navigate back to any message and fork the conversation to explore alternatives.

Branch off from any message without losing the original conversation thread.

Configure default AI behavior per conversation (tone, style, expertise level).

Pre-built conversation starters for common creative tasks.

WebSocket broadcasting shows thinking spinner, then response appears instantly.

Every chat interaction recorded in cost dashboard for analytics.

Easy navigation and management of all your conversations.

Cmd/Ctrl+Enter sends messages, streamlining the typing experience.

Pick up any conversation instantly, even after a year. Redis + disk dehydration enables infinite history.


Unified Gallery with Hierarchical Navigation

A complete reimagining of how images are organized and navigated in PanelForge. What began as adding flexible hierarchy assignment evolved into a unified Gallery experience with tree navigation, comic book branding, and major architectural consolidation. Two distinct phases delivered a cohesive system spanning 85+ files with ~6,000 lines of changes.


The Story

The original PanelForge architecture tied images directly to panels through a rigid one-to-one relationship. Every image HAD to belong to a specific panel within a specific scene within a specific project. But comic creation workflows are messy - artists often have reference images that apply to an entire project, scene sketches that aren't panel-specific yet, or generated images that could fit multiple contexts. The rigid structure forced artificial organization decisions. Additionally, navigating large image collections required extensive scrolling with no visual hierarchy representation.

This feature evolved through two distinct phases over an intensive development session. Phase 1 (fa6f955) tackled the database schema: adding project_id and scene_id to images with SQLite-compatible migrations and Eloquent auto-sync events, plus the ProjectScenePanelSelector cascading dropdown component.

Phase 2 (b64fe6c+) introduced tree navigation, initially as a dedicated Browse page, but user testing revealed context-switching friction. The QA process led to merging everything back into a unified Gallery with tree navigation embedded, eliminating 2,200+ lines through consolidation while improving the experience. The comic book Panel Forge branding emerged organically during Phase 2, with Bangers and Comic Neue fonts giving the tree a distinctive visual identity.

What made this collaboration powerful was the willingness to iterate: recognizing that the "right" architecture emerged through exploration, not upfront planning.
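The auto-sync rule (a panel implies its scene, a scene implies its project) and the cascading dropdown filter can be sketched in TypeScript. PanelForge implements the sync with Eloquent model events in PHP; the `Hierarchy` lookup maps and the `syncAssignment` and `scenesForProject` functions here are hypothetical illustrations of the same invariant.

```typescript
interface Assignment {
  project_id: number | null;
  scene_id: number | null;
  panel_id: number | null;
}

// Hypothetical lookups: panel -> scene and scene -> project.
interface Hierarchy {
  sceneOfPanel: Map<number, number>;
  projectOfScene: Map<number, number>;
}

// Fill parents from the most specific level that is set, keeping the chain consistent.
function syncAssignment(a: Assignment, h: Hierarchy): Assignment {
  const synced = { ...a };
  if (synced.panel_id !== null) {
    synced.scene_id = h.sceneOfPanel.get(synced.panel_id) ?? null;
  }
  if (synced.scene_id !== null) {
    synced.project_id = h.projectOfScene.get(synced.scene_id) ?? null;
  }
  return synced;
}

// Cascading dropdown: only scenes that belong to the selected project.
function scenesForProject(projectId: number, h: Hierarchy): number[] {
  return Array.from(h.projectOfScene)
    .filter(([, project]) => project === projectId)
    .map(([scene]) => scene);
}
```

A project-only assignment passes through unchanged, which is what allows images to live at any level of the hierarchy.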

Key Features

Assign images to just a project, project + scene, or the full hierarchy. No more forced panel associations.

Selecting a panel automatically fills in its scene and project. The hierarchy chain is always consistent.

Searchable dropdowns filter dynamically - scenes show only those in the selected project, panels only in the selected scene.

Create new projects, scenes, or panels without leaving your workflow. The 'Create New...' option is always available.

Available everywhere you work: Gallery image modal, Lineage view, Inpainting page, and Generate page.

Gallery cards show color-coded badges indicating where each image lives in your organizational structure.

Tree navigation embedded directly in Gallery - no more context-switching between separate pages.

Collapsible BrowseTree with color-coded nodes (yellow/cyan/fuchsia) and image count badges.

Bangers font for projects, Comic Neue for scenes/panels - distinctive Panel Forge visual identity.

SelectionToolbar, ImageDetailsSheet, and composables enable consistent UX across all pages.


More Milestones Coming

As PanelForge evolves, new spotlights will document each major advancement in the journey from v4 to v5 and beyond.